Here in Massachusetts, with the March winds whipping and snow always a threat, a week’s vacation down south is a common fantasy. Even if it means a 10-hour car ride, most of us relish the thought.

But suppose our usual set of wheels, a Mini Cooper, say, is in the shop. (Potholes the size of craters are a common reality here.) Instead of forgoing our vacation, we decide to rent a vehicle. Chances are another Mini Cooper won’t rank as our first choice. Sure, a car that size could get us from Boston to the Outer Banks. But at what cost to our comfort and cargo?

We can think of study designs as kinds of road trips, and our eClinical tools as vehicles. Randomized controlled trials (RCTs) and registry studies are only two such journeys, but they’re two of the most frequent we in the research community take. In both cases, most of us rely on electronic data capture (EDC) to help us reach our destination.

How do we choose the EDC “vehicle” that will get us there safely, with minimal delays? Marquee brand names matter less than road-tested features. Consider the relative importance of these EDC features in RCTs versus registries.

| Feature | RCTs | Registries |
| --- | --- | --- |
| Automatic reporting and notification | Important, especially as interim analyses approach | Very important, to maintain desired balance among subgroup sizes and to ensure that sites contact participants at the appropriate intervals |
| Interoperability | Important, especially for trials that need to consume a high volume of lab and imaging data on a regular basis | Very important, as EHR data can easily account for more than half of a registry’s data |
| Researcher ease-of-use | Very important, to drive data entry timelines, reduce queries, and ensure quality | Critically important, for the reasons listed under RCTs, as well as to minimize collection burden and complement the flow of clinical care |
| Participant ease-of-use | Often irrelevant, otherwise critically important, depending on whether patient-reported outcomes (PRO) are collected | Often critically important, as PRO is a far more common data source for registries |

Let’s look briefly at each of these four features in turn.

Automatic reporting and notification

Registries may be observational, but make no mistake: there’s still plenty to do, especially when it comes to ensuring the internal and external validity of the study design. As with RCTs, registries begin that task before the first participant is ever enrolled. Inclusion and exclusion criteria define the patient population from which the study will draw. Enrollment targets and duration parameters are set to deliver the necessary statistical power. Data elements are selected ahead of time, as are relevant outcomes.

But RCTs wield two defenses against bias that registries do not: highly specific eligibility criteria, and randomization itself. The first defense minimizes the role confounding factors can play, while the second helps ensure that the influence of confounders is balanced between comparison groups. Registries, on the other hand, because of their greater need to reflect the diversity of the real world, cast “a wider net” with their eligibility criteria. In doing so, the room for selection bias–and confounder impact–grows. And because oversampled patient types are not randomized to one or more groups in a registry, they can distort findings more powerfully.

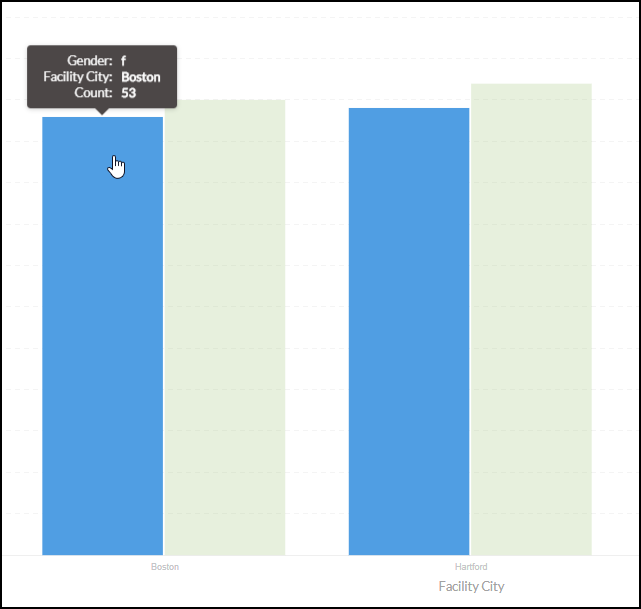

The registry data manager, then, is often engaged in a constant battle against selection bias. She has no more powerful weapon than real-time reporting, which can signal when enrollment efforts need to be retargeted.

Typically, criteria for registry enrollment aren’t as selective as they are for RCTs. That kind of wiggle room leaves the door open for selection bias. Regular, visual reporting of subgroup counts (e.g., patients of a certain race, ethnicity, sex, age, or socioeconomic status) is indispensable to maintaining a registry population that is representative of the general population with the disease, exposure, or treatment under study.

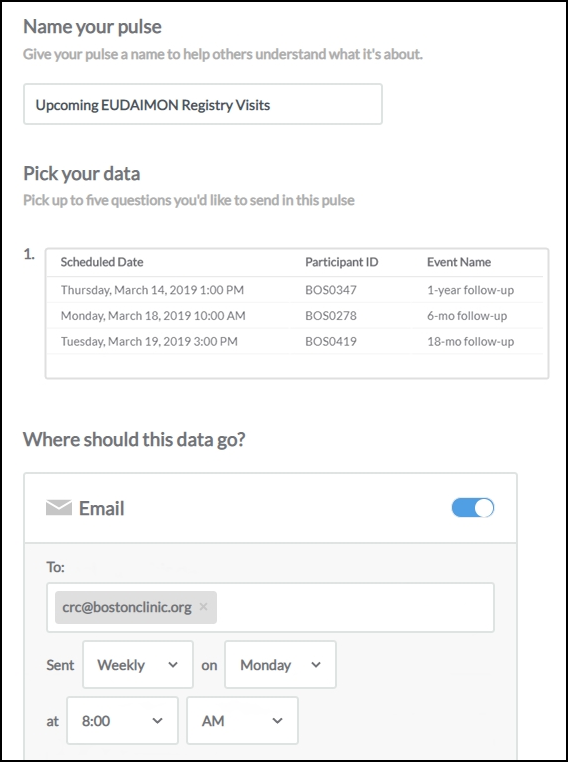
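To make the idea of subgroup reporting concrete, here is a minimal Python sketch of the kind of check such a report might run. The function name `subgroup_gaps`, the target shares, and the 5-point tolerance are all assumptions made for illustration; they are not features of any particular EDC.

```python
from collections import Counter


def subgroup_gaps(enrolled, targets, tolerance=0.05):
    """Return subgroups whose enrolled share differs from the target share
    by more than `tolerance` (hypothetical threshold).

    enrolled: list of subgroup labels, one per enrolled participant
    targets:  dict mapping subgroup label -> desired share (0..1)
    """
    counts = Counter(enrolled)
    total = sum(counts.values())
    gaps = {}
    for group, target_share in targets.items():
        actual_share = counts.get(group, 0) / total if total else 0.0
        diff = actual_share - target_share
        if abs(diff) > tolerance:
            # Negative means under-enrolled, positive means over-enrolled.
            gaps[group] = round(diff, 3)
    return gaps


# Illustrative data only: 30 female and 70 male participants, 50/50 target.
enrolled = ["female"] * 30 + ["male"] * 70
targets = {"female": 0.5, "male": 0.5}
report = subgroup_gaps(enrolled, targets)
```

A negative gap for a subgroup would be the signal to retarget enrollment toward it.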

That same real-time reporting, directed now at the site, can automatically prompt CRCs to contact participants in a longitudinal study at the right intervals. Why is this important? Missed visits mean missing data, which poses two risks. The first is a failure to collect enough overall data points to achieve the desired statistical power. The second, more subtle risk pertains to whom the missing data belongs. If a certain patient subgroup is disproportionately more likely to miss visits (and therefore leave blank spaces in the final dataset), results become biased toward the subgroups who were compliant with visit schedules.

Missing data is the scourge of registries. Without consistent outreach to all participants from sites, the data collected can easily be skewed by those participants who are proactive in keeping their appointments. Give your sites helpful, regular reminders of upcoming milestones for their participants.
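As a rough illustration of what an automated site reminder could compute, the Python sketch below lists participants whose next follow-up is approaching or overdue. The 90-day interval, the 14-day lead time, and the record layout are assumptions chosen for the example, not a description of any specific system.

```python
from datetime import date, timedelta


def due_for_contact(participants, today, interval_days=90, lead_days=14):
    """Return (participant id, next visit date) pairs for anyone whose next
    visit falls within `lead_days` of `today` or is already overdue."""
    due = []
    for p in participants:
        next_visit = p["last_visit"] + timedelta(days=interval_days)
        if next_visit - today <= timedelta(days=lead_days):
            due.append((p["id"], next_visit))
    # Earliest (most urgent) visits first.
    return sorted(due, key=lambda item: item[1])


# Illustrative records only.
participants = [
    {"id": "P-001", "last_visit": date(2024, 1, 10)},
    {"id": "P-002", "last_visit": date(2024, 3, 1)},
]
reminders = due_for_contact(participants, today=date(2024, 4, 1))
```

A list like `reminders` could then feed a scheduled email or dashboard for each site's coordinators.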

The takeaway? Look for a data management system that allows you to build clear, actionable reports, and to push them out automatically to sites and other stakeholders on a schedule you set.

Interoperability

The life sciences are awash with data, and yet how little of it flows smoothly from tank to tank. My blood type, and yours, is very likely recorded in a database somewhere. Yet, if either of us participates in a study where that blood type is a variable, we are almost certainly looking at a new finger prick.

The situation is poor enough for RCTs, but becomes dire with registries. Registries that don’t easily consume extant secondary data place increased burden on site staff, who are rarely reimbursed well or at all for their contribution. RCTs, on the other hand, often pay per assessment. Also unlike RCTs, registries make more frequent use of this data:

While some data in a registry are collected directly for registry purposes (primary data collection), important information also can be transferred into the registry from existing databases. Examples include demographic information from a hospital admission, discharge, and transfer system; medication use from a pharmacy database; and disease and treatment information, such as details of the coronary anatomy and percutaneous coronary intervention from a catheterization laboratory information system, electronic medical record, or medical claims databases. – Gliklich RE, Dreyer NA, Leavy MB, editors. Registries for Evaluating Patient Outcomes: A User’s Guide [Internet]. 3rd edition. Rockville (MD): Agency for Healthcare Research and Quality (US); 2014 Apr. 6, Data Sources for Registries.

Clearly, the ability to exchange data among multiple sources in a programmatic way (i.e., interoperability) is a must-have for the EDC that will power your registry. Of course, unlike data storage capacity, you can’t quantify interoperability with just a number and a unit of measure. Interoperability is a technical trait that depends on more fundamental attributes:

- Data standards – Does the system “speak” an open, globally recognized language, such as CDISC?

- API services – Does the system offer clear, well-documented processes for accepting (and mapping) data that is pushed to it from external sources?

- Security – Will data that enter, leave, and reside within the system remain encrypted at all times?

Before selecting an EDC, press your prospective vendors on the questions above. Then inquire exactly how they’ll ensure safe and reliable integration between their system and all your data sources.
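To picture what “accepting (and mapping)” pushed data might involve, consider this minimal Python sketch. The source keys, the registry field names, and the `FIELD_MAP` table are hypothetical; a real integration would follow the vendor’s documented API and a recognized data standard.

```python
# Hypothetical mapping from an external source's keys to registry field names.
FIELD_MAP = {
    "birthDate": "dob",
    "gender": "sex",
    "bloodType": "blood_type",
}


def map_record(payload):
    """Split an incoming record into mapped registry fields and the source
    keys that have no mapping yet (so they can be reviewed, not dropped silently)."""
    mapped, unmapped = {}, []
    for key, value in payload.items():
        target = FIELD_MAP.get(key)
        if target is None:
            unmapped.append(key)
        else:
            mapped[target] = value
    return mapped, unmapped


# Illustrative payload only.
incoming = {"birthDate": "1980-05-17", "bloodType": "O+", "maritalStatus": "M"}
mapped, unmapped = map_record(incoming)
```

Surfacing the unmapped keys, rather than discarding them, is one way to keep an integration auditable as source systems change.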

Researcher ease-of-use

Contributing to clinical research is, for many, its own reward. The prospect of expanding our medical knowledge and, perhaps, improving patient lives, is a powerful incentive. But it’s easy for a clinician or researcher to lose sight of these ideals in the middle of a hectic workday. When the research is long and unpaid, which is more likely to be the case for a registry than an RCT, the will to “get the work done” can quickly trump the will to do it right.

Leaders of registry operations, therefore, have an even greater responsibility than their RCT peers to keep hurdles low. That’s a wide-ranging obligation, but ensuring a frustration-free data capture experience stands at or near its center.

First, a clinical research coordinator (CRC) should meet with no obstacle the tasks of signing in to their EDC and navigating to the right participant. These are the “low bars.” Even so, they can easily trip up thick-client systems, and even web-based systems that aren’t built for performance or designed with UX (user experience) principles always front of mind.

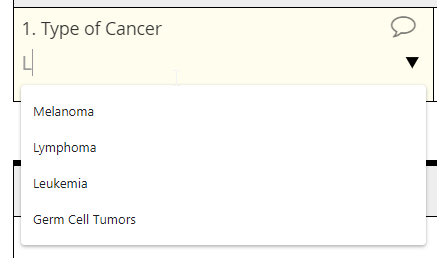

But the most important ease-of-use tests happen in the context of the electronic case report form (eCRF). Recall that a large portion of registry data comes from clinical encounters that occur in the delivery of standard care. Think pulse oximetry, or resting heart rate. Consequently, any eCRF that can’t be completed while in the exam room ought to have you raising an eyebrow. Accept nothing less than forms that render clearly in any browser, on any device (no matter how it’s held). But that’s not all. Fields on the form need to be “smart”: appearing only when they are relevant; capable of showing specific, real-time messages when the entered value is invalid; and hanging on to input even if an internet connection is lost. Finally, these fields should “remember” and calculate for the CRC, instantly pulling in patient data from visits ago to reference in the current form, and effortlessly turning a height and weight into a BMI.
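The three “smart” behaviors described above (calculated values, real-time edit checks, and fields that appear only when relevant) can be sketched in a few lines of Python. The field names, the ranges, and the smoking follow-up question are assumptions for illustration; an actual EDC would typically express these rules in its own form-definition language.

```python
def bmi(height_cm, weight_kg):
    """Calculated field: body mass index in kg/m^2, rounded to one decimal."""
    height_m = height_cm / 100
    return round(weight_kg / height_m ** 2, 1)


def check_range(name, value, low, high):
    """Edit check: return a specific message if the value is missing or
    out of range, or None if the value is acceptable."""
    if value is None:
        return f"{name} is required."
    if not low <= value <= high:
        return f"{name} must be between {low} and {high}; got {value}."
    return None


def visible_fields(form):
    """Skip logic: show the follow-up question only when it is relevant."""
    fields = ["height_cm", "weight_kg", "smoker"]
    if form.get("smoker") == "yes":
        fields.append("cigarettes_per_day")
    return fields


# Illustrative values only.
computed_bmi = bmi(170, 65)
message = check_range("SpO2 (%)", 105, 50, 100)
shown = visible_fields({"smoker": "no"})
```

Here the out-of-range oximetry value produces a message the CRC can act on immediately, and the non-smoker never sees the cigarette question.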

Can’t pull medical history from the EHR? Help your CRC out with fast and responsive autocomplete fields.

In short, contributing to your registry should go hand in hand with delivering excellent patient care and keeping accurate, up-to-date records. The further those drift apart, the more your registry suffers.

Participant ease-of-use

What endpoints are to RCTs, outcomes are to registries. And where there’s a concern with outcomes, there is (often) a concern with patient self-reports. Ergo, chances are high that your next registry will rely on patient-reported outcomes (PRO) as one of its data sources.

If we need to keep the barriers to data submission low for researchers, we need to keep them all but invisible to participants–while ensuring data quality. The simple paper form may appear to offer this balance. Historically, it may have done just that. But twenty years of Internet use have changed our expectations when it comes to offering personal information. Without sacrificing one bit (or byte) of security, we want the same ease in reporting aches to a physician as we find in booking a flight. We want instant “help” when we don’t understand a question, and we don’t want to be asked about matters that don’t apply to us.

Given the expectations above, a study that utilizes even a single PRO instrument can benefit from making the conversion to ePRO. Real-time edit checks, for example, re-orient the participant when their input conflicts with field requirements, without risking the influence of a human interpreter. The time and cost of transcription disappear.

When PRO takes the form of a patient diary, paper’s dirty secrets truly come into the light. Provided the paper form isn’t lost or damaged in the first place, it’s virtually impossible to tell whether a patient made daily diary entries as instructed, or retrospectively wrote responses just prior to a study visit, raising data quality concerns.

As a field, we’ve embraced ePRO for the last decade. But too many ePRO solutions don’t offer the ease or convenience they should. Many depend on provisioned devices, difficult to use and prone to malfunction. Web-based ePRO technologies are a step in the right direction. Here, too, though, industry efforts to deliver an effortless experience often fall short. Special software (such as smartphone apps) requires storage space, not to mention the know-how and patience for download, installation, and activation. Along with everything else participants need to remember, is it really fair–or feasible–to add a password, browser recommendations, and “virtual check-in times” to the list?


The answer lies in allowing patients to use their own devices, be it a laptop or a smartphone, and to submit their data on the browser with which they’re most comfortable. Form URLs specially encoded for each participant make passwords unnecessary, while auto-scheduled email and SMS messages provide a friendly, “just-in-time” reminder to make their report. And what better way to convey a message of collaboration with the participant than eConsent? While its role in risky, interventional trials may still be unclear, eConsent is tailor-made for registries: it can deliver an interactive education on the purpose of the study, ensure comprehension with in-form quizzes, and signal real-time recruitment trends to registry leaders.
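One way a participant-specific form URL could work is sketched below in Python, using a keyed signature (HMAC) so the server can confirm that a link was issued for a given participant and form. This is only an illustration: the base URL, secret key, and parameter names are hypothetical, and a production system might instead use opaque random tokens with expiry dates.

```python
import hashlib
import hmac
from urllib.parse import urlencode

SECRET_KEY = b"replace-with-a-real-secret"       # hypothetical; keep server-side
BASE_URL = "https://registry.example.org/forms"  # hypothetical address


def sign(participant_id, form_id):
    """Compute a signature tying this participant to this form."""
    message = f"{participant_id}:{form_id}".encode()
    return hmac.new(SECRET_KEY, message, hashlib.sha256).hexdigest()


def form_url(participant_id, form_id):
    """Build the link to send by email or SMS; no password required."""
    query = urlencode({"p": participant_id, "sig": sign(participant_id, form_id)})
    return f"{BASE_URL}/{form_id}?{query}"


def is_valid(participant_id, form_id, signature):
    """Check an incoming link; compare_digest avoids timing leaks."""
    return hmac.compare_digest(sign(participant_id, form_id), signature)


# Illustrative identifiers only.
link = form_url("P-001", "pain-diary")
ok = is_valid("P-001", "pain-diary", sign("P-001", "pain-diary"))
tampered = is_valid("P-002", "pain-diary", sign("P-001", "pain-diary"))
```

A link altered to point at another participant fails the check, so the convenience of a password-free URL need not come at the expense of security.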

As for ePRO data collection itself, layout, question order, and response mechanism can all make the difference between valid, timely data and no data at all. The participant isn’t an amateur researcher, and won’t tolerate the kinds of screens all of us envision when we think of EMRs. Data collection should proceed from the simple to the complex, leveraging skip logic to trigger only those questions that are relevant, and using autocomplete to help with terminology. A single column layout, a conspicuous progress bar and page advance button, autosave–all of these features are crucial to treating patients like the study VIPs that they are.