Looking for another success guide? See our guides on cross-form intelligence, ePRO form design, and site performance reporting.

In the months ahead, Journal for Clinical Studies will publish a detailed guide to designing eligibility forms, authored by OpenClinica! The complete contents are embargoed until they appear (for free) on the journal's website. As soon as the guide is published, we'll provide a link to it here. In the meantime, here's a brief excerpt and an interactive form illustrating one of the guide's four core principles, "Make your forms carry out the logic."

From *The Four Criteria of a Perfect Eligibility Form: A Success Guide*, forthcoming in *Journal for Clinical Studies*

Think a moment about the human brain. Specifically, think about its capacity to carry out any logical deduction without flaw, time and again, against a background of distractions, and even urgent medical issues.

It doesn’t have the best track record.

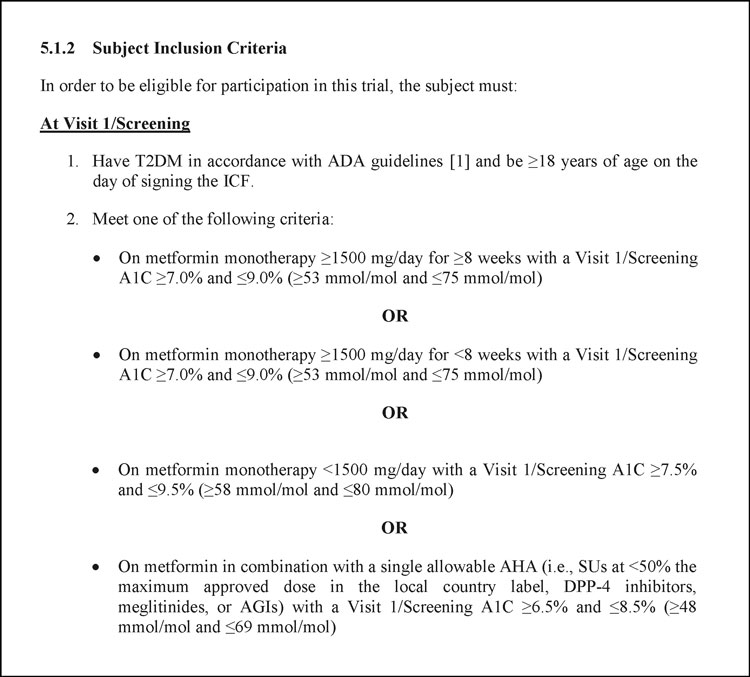

Even the most logical research coordinator could benefit from an aid that parses all of the and's, or's, and not's scattered throughout your study's eligibility criteria. A good form serves as that aid. Consider the following inclusion criteria, taken from a protocol published on clinicaltrials.gov.

Inclusion criterion #1 is straightforward enough. (Although even there, two criteria are compounded into one.) By contrast, there are countless ways of meeting, or missing, criterion #2. It's easy to imagine a busy CRC mistaking some combination of metformin dose and A1C level for a qualifying one, when in fact it isn't.

But computing devices don't make these sorts of errors. All the software needs from a data manager is the right logical expression (e.g., criterion #2 is met if and only if A and B are both true, OR C and D are both true, etc.). Once that's in place, the CRC can depend on your form to apply the criterion correctly every time. Best of all, that expression can live under the surface of your form. All the CRC needs to do is provide the inputs that correspond to A, B, C, and D. The form then evaluates the logic instantly, invisibly, and flawlessly.
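To make the idea concrete, here is a minimal sketch in plain Python of the kind of expression a data manager might encode. A, B, C, and D are placeholders for the yes/no inputs a CRC would supply; the actual conditions behind criterion #2 (metformin dose, A1C level) are not reproduced here, and this is an illustration of the logic only, not OpenClinica's own rule syntax. The function names are hypothetical.

```python
from itertools import product


def criterion_2_met(a: bool, b: bool, c: bool, d: bool) -> bool:
    """Placeholder rule: criterion #2 is met iff (A and B) or (C and D)."""
    return (a and b) or (c and d)


def qualifying_combinations() -> list[tuple[bool, ...]]:
    """Return every combination of the four inputs that satisfies the rule."""
    return [combo for combo in product([False, True], repeat=4)
            if criterion_2_met(*combo)]


if __name__ == "__main__":
    # Print the full truth table: all 16 ways a CRC could answer A-D.
    for combo in product([False, True], repeat=4):
        verdict = "met" if criterion_2_met(*combo) else "not met"
        print(combo, "->", verdict)
    print(len(qualifying_combinations()), "of 16 combinations qualify")
```

Enumerating every input combination this way is also a quick check that an expression matches the protocol before a form goes live. Under this placeholder rule only 7 of the 16 combinations qualify, which is exactly the kind of detail that is easy to get wrong by hand.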